We are closely following the Ceph advancements targeting NVMe performance. We use Ceph as the core of the hosted private cloud in our Infrastructure as a Service product. Our concern, shared by many long-time fans of Ceph, is how Ceph will adapt to NVMe performance when its history has long been rooted in spinning media.

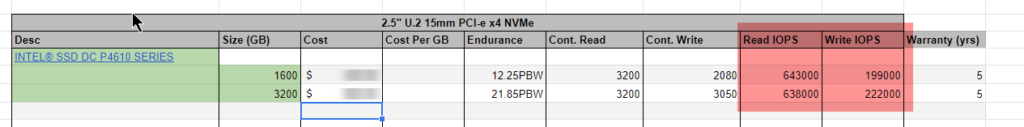

For example, we currently use very high-performance NVMe drives in almost all of our converged Ceph and OpenStack clusters. The raw IOPS can only be described as stunning. These drives are extremely fast, much faster than almost anything you can throw at them. See the specs below:

As many of you know, introducing Ceph as the HA storage backend places quite a bit of software between the CPUs and the storage. Ceph is brilliant at stability, scaling, data integrity, and much more, but one thing it is not brilliant at is giving the CPU access to all those IOPS.

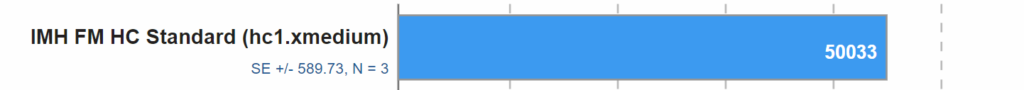

There are many reasons for this, both "on server" and in the network, but the net effect is that, after everything Ceph has to do, performance appears to be roughly one tenth of what the hardware can actually deliver. When we connect from a VM to a very lightly loaded Ceph NVMe cluster, this is what we see for reads, for example.

Certainly, some modifications and tweaks can bring those numbers up. But the gap between these results and what the hardware can do is so large that we evaluated Ceph against other potential solutions. In particular, when you look at what NVMe over Fabrics can do, it hands the Ceph wizards a very complex problem.

To everyone on the team working on Ceph Crimson: we are very excited and ready to help in any way we can.