Private Cloud as a service. Bare Metal as a service.

Modern cloud. Transparent costs. No overruns.

OpenMetal Starter Cloud

Launch your own hosted private cloud on a 3-server Cloud Core, all in 45 seconds.

On-Demand OpenStack

The #1 cloud management system you love, delivered right now, root and all.

Dedicated Bare Metal

Support demanding workloads such as ClickHouse, Kafka, Hadoop, Spark, Cassandra, and more.

Ceph Storage Clusters

Large-scale storage clusters for bulk object storage, plus block storage and network file systems.

Working Together for Your Success

Your team is the kind of people we would dream of having on our own team. And the great thing about working with you is that it feels like they are part of our team. This quickly won over trust with us.

What is OpenMetal?

OpenMetal is a leading provider of open source private cloud as a service and dedicated servers as a service. The strengths of public cloud, private cloud, and bare metal have been fused into an alternative cloud platform, powered by OpenStack, Ceph, and bare metal automation.

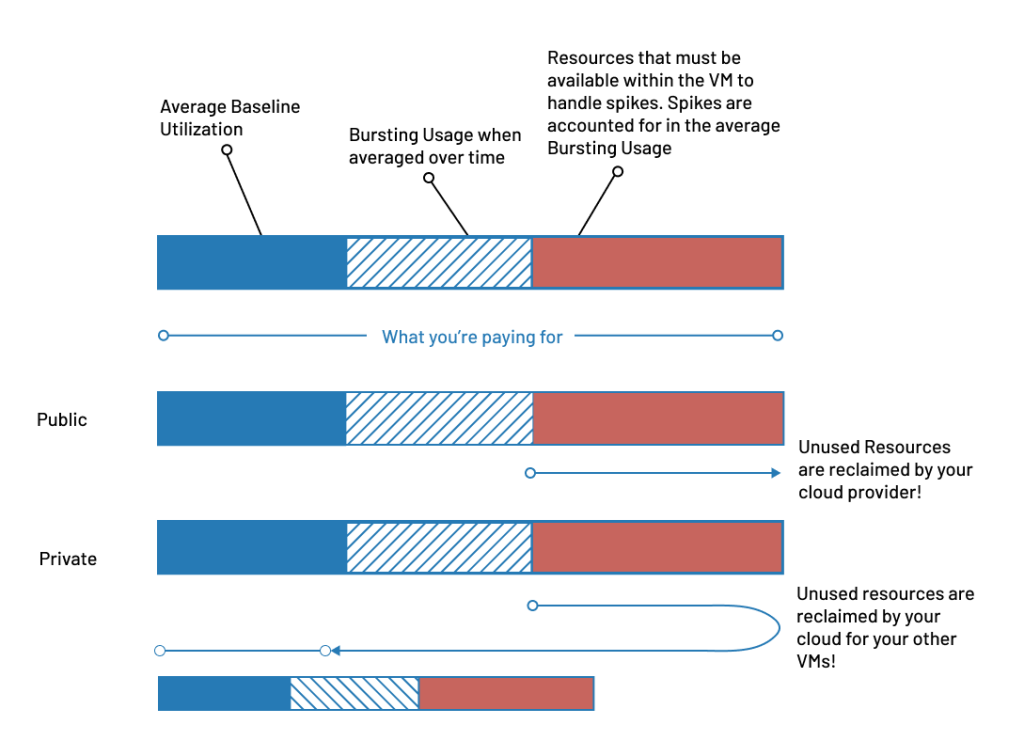

Advantages Over Traditional Public Cloud

- Up to 3.5x more efficient than public cloud

- Set your hardware budget, no surprise bills

- Fair egress costs

Advantages Over Traditional Private Cloud

- Instant provisioning, scale up and down easily

- No hardware management

- No license costs

| | Private | Public | |
|---|---|---|---|
| Tenant-First Goals | | | |
| Private Security Model | | | |
| Root Control | | | |
| Dedicated to You | | | |
| Tune to Workloads | | | |
| Custom Flavors | | | |
| Fair Egress Costs | | | |
| No Surprise Billing | | | |
| 4-5 Year Agreements | | | |
| 1-3 Year Agreements | | | |
| Pay as You Go | | | |
| Launch Instantly | | | |
| Self Service Purchasing | | | |
| Rapid Scaling | | | |
| Managed Hardware | | | |
| Rapid PoCs | | | |
| Easy Multi-Region | | | |

Explore cloud features, check cloud core pricing, or come meet us!

Best-In-Class Cloud Technology

The tech you love, the options you want, the API-first approach you need.

All from people who believe in the power of open source.

Open Source at the Core

Discover the driving motivation behind the development of OpenMetal and its commitment to support the growth and advancement of open source and its communities.

Innovation On Demand

Learn how to harness the power of OpenMetal to build and deploy enterprise-level OpenStack private clouds for companies of any size in less than a minute.

Powered by Open Source

OpenMetal is a Silver Member of the OpenInfra Foundation. The OpenMetal Cloud Core is powered by OpenStack, the #1 open source cloud infrastructure platform, plus Ceph, the #1 open source storage system in the world. OpenStack private clouds, hosted private clouds, managed private clouds, and storage clusters are all proudly run on open source. You can be confident you’re getting the right cloud IaaS solution with the support of a leading OpenStack provider.