Recently we moved our new “On-Demand OpenStack” private clouds to being backed by Ceph Octopus. There are a lot of reasons for this, but the largest was the potential for greater performance with Octopus.

BlueStore, which became stable in the Luminous release, was already a huge leap forward compared to Filestore. That being said, having BlueStore and knowing how to tune it for a specific application are two different things, and learning the latter is a process for sure.

Based on our research and tests, we are very excited about the benefits we are seeing for our Flex Metal private clouds. Octopus really is a great new release, but we found it hard to locate good tutorials and presentations covering BlueStore and the changes specific to Ceph Octopus. So, here is some of what we learned. Hopefully it is useful and motivates you to upgrade to Octopus.

Drive Performance and Ceph Octopus with BlueStore

First, though BlueStore is a great upgrade for everyone, some of the key motivations behind it were to account for the changes NVMe drives bring and to adapt Ceph ahead of full NVMe adoption.

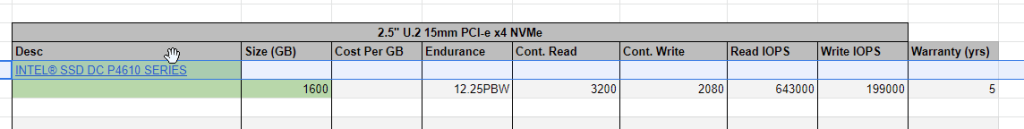

Most of our hyper-converged private cloud options use Intel DC P4610 NVMe drives. When you are using NVMe, there are many considerations that are distinct from those for spinning disks or slower SATA SSDs.

Yes, those are the specs: 643,000 read IOPS and 199,000 write IOPS. Sure, there are many caveats to reaching that performance, but those are large numbers even if something else in the system becomes the bottleneck before the drive does.
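To make those numbers a little more concrete, here is a small back-of-the-envelope sketch that converts the quoted IOPS figures into throughput and into the average time between completed I/Os. It assumes every I/O is 4 KiB; that block size is our assumption for illustration, not something stated in the specs quoted above.

```python
# Back-of-the-envelope conversion of the quoted Intel DC P4610 figures.
# ASSUMPTION: every I/O is 4 KiB. The block size is chosen for illustration only.

READ_IOPS = 643_000      # quoted read IOPS
WRITE_IOPS = 199_000     # quoted write IOPS
BLOCK_BYTES = 4 * 1024   # assumed 4 KiB per I/O (hypothetical for this sketch)


def iops_to_gib_per_s(iops: int, block_bytes: int = BLOCK_BYTES) -> float:
    """Convert an IOPS figure to throughput in GiB/s at a fixed block size."""
    return iops * block_bytes / 1024**3


def ns_per_io(iops: int) -> float:
    """Average time between completed I/Os, in nanoseconds, at the given rate."""
    return 1e9 / iops


if __name__ == "__main__":
    for label, iops in (("read", READ_IOPS), ("write", WRITE_IOPS)):
        print(f"{label}: {iops_to_gib_per_s(iops):.2f} GiB/s, "
              f"one I/O every {ns_per_io(iops):.0f} ns")
```

At the assumed 4 KiB size, the read figure works out to roughly 2.45 GiB/s, or one I/O completing about every 1.6 microseconds. A time budget that tight suggests why other parts of the stack can end up limiting what the drive actually delivers.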

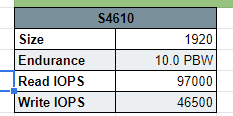

SATA SSDs were a revolution of their own and remain a critical, cost-effective part of high-performing systems. We use them in our smallest Hyper-Converged Cloud, where they offer a great combination of cost, performance, and size. But NVMe I/O numbers are simply in a different class.